Ordinary anti-scraping measures can no longer stop me from scraping images!

1. To use IP proxies, you first need a proxy IP pool

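Here is one way to build your own proxy IP pool: scrape the proxy IPs published online and store them in a file or a database. Each time you use the pool, weed out the dead IPs, and keep refreshing it so that the IPs you call stay valid.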

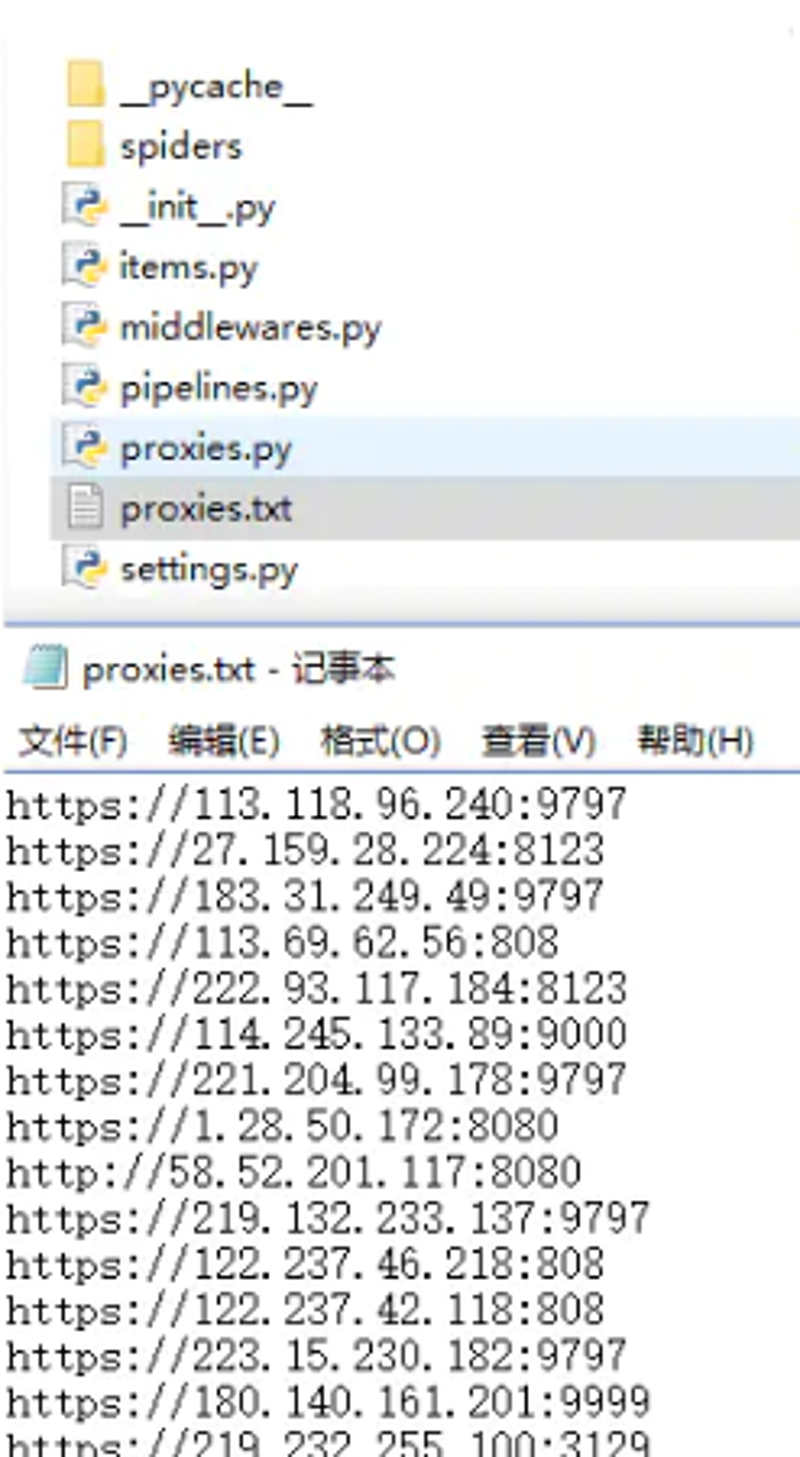
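Below is a minimal sketch of the "weed out dead IPs and refresh" step. It assumes the pool is a plain text file with one proxy URL per line, which is the format proxies.py below writes. `refresh_pool` and its `is_alive` callback are hypothetical names and do not appear in the original code. `is_alive` stands in for whatever check you use, such as sending a request through the proxy. The function deduplicates the list, keeps the proxies that pass, and swaps the new file in with `os.replace`. On POSIX systems that swap is atomic, so a spider reading the file at the same moment sees either the old list or the new one, never a half-written file.

```python
import os
import tempfile


def refresh_pool(path, is_alive):
    """Re-check every proxy in `path`, keep the live ones, and rewrite the file.

    Hypothetical helper: `is_alive` is any callable that takes a proxy string
    and returns True if the proxy still works.
    """
    with open(path) as f:
        # Strip whitespace, skip blank lines, and drop duplicates (first-seen order kept).
        candidates = list(dict.fromkeys(line.strip() for line in f if line.strip()))
    alive = [proxy for proxy in candidates if is_alive(proxy)]
    # Write to a temp file in the same directory, then swap it in with os.replace,
    # so readers never see a partially written proxy list.
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, text=True)
    with os.fdopen(fd, 'w') as f:
        f.writelines(proxy + '\n' for proxy in alive)
    os.replace(tmp_path, path)
    return alive
```

To keep the pool fresh you could call it from a loop such as `while True: refresh_pool('proxies.txt', check); time.sleep(600)`. Here `check` and the 10-minute interval are placeholders.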

Example: scrape proxy IPs from Xici Proxy (xicidaili.com) and save them to proxies.txt for the Scrapy spider below to use.

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# File type:  python
# Created:    2019/5/22 16:13
# Author:     SongBin
# File name:  proxies.py
# Summary:    automatically refresh the IP pool
# A class that fetches proxy IPs automatically; run it once to save the IPs to a txt file.
# Usage: run `python proxies.py` and the working IPs are saved to proxies.txt
import random
from multiprocessing import Process, Queue

import requests
from bs4 import BeautifulSoup  # the 'lxml' parser used below requires the lxml package

# Page used to test each proxy (the author's target site)
TEST_URL = 'http://z1.krsncjdnr.xyz/pw/'


class Proxies(object):
    """Scrape free proxies from xicidaili.com and keep only the ones that work."""

    def __init__(self, page=3):
        self.proxies = []
        self.page = page
        self.headers = {
            'Accept': '*/*',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 '
                          '(KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36',
            'Accept-Encoding': 'gzip, deflate, sdch',
            'Accept-Language': 'zh-CN,zh;q=0.8',
        }
        self.get_proxies()
        self.get_proxies_nn()

    def _crawl(self, kind):
        """Scrape self.page consecutive list pages of /<kind>/, starting at a random page 1-10."""
        start = random.randint(1, 10)
        for page in range(start, start + self.page):
            url = 'http://www.xicidaili.com/%s/%d' % (kind, page)
            html = requests.get(url, headers=self.headers, timeout=10).content
            ip_list = BeautifulSoup(html, 'lxml').find(id='ip_list')
            if ip_list is None:  # blocked, or the page layout changed
                continue
            # Skip the header row. Rows alternate between class="odd" and no class,
            # so matching only class_='odd' would drop half of the proxies.
            for row in ip_list.find_all('tr')[1:]:
                tds = row.find_all('td')
                if len(tds) < 6:
                    continue
                protocol = tds[5].get_text().strip().lower() + '://'  # column 6: HTTP/HTTPS
                # Columns 2-3 hold IP and port -> e.g. "http://1.2.3.4:8080"
                self.proxies.append(protocol + ':'.join(td.get_text().strip() for td in tds[1:3]))

    def get_proxies(self):
        self._crawl('nt')

    def get_proxies_nn(self):
        self._crawl('nn')

    def verify_proxies(self):
        old_queue = Queue()  # proxies not yet verified
        new_queue = Queue()  # proxies that passed verification
        print('verify proxy........')
        works = [Process(target=self.verify_one_proxy, args=(old_queue, new_queue))
                 for _ in range(15)]
        for work in works:
            work.start()
        for proxy in self.proxies:
            old_queue.put(proxy)
        for _ in works:
            old_queue.put(0)  # one stop signal per worker
        # Read new_queue before join(): a process that has put items on a Queue does
        # not terminate until they are flushed into the pipe, so joining first can deadlock.
        self.proxies = []
        finished = 0
        while finished < len(works):
            item = new_queue.get()
            if item == 0:  # a worker has finished
                finished += 1
            else:
                self.proxies.append(item)
        for work in works:
            work.join()
        print('verify_proxies done!')

    def verify_one_proxy(self, old_queue, new_queue):
        while True:
            proxy = old_queue.get()
            if proxy == 0:
                new_queue.put(0)  # tell the parent this worker is done
                break
            # Route both schemes through the proxy; otherwise the http:// TEST_URL
            # bypasses entries stored as https:// and they pass untested.
            # Assumption: these are plain HTTP proxies, so connect to them over http://.
            address = proxy.split('://', 1)[-1]
            proxies = {'http': 'http://' + address, 'https': 'http://' + address}
            try:
                if requests.get(TEST_URL, proxies=proxies, timeout=2).status_code == 200:
                    print('success %s' % proxy)
                    new_queue.put(proxy)
            except Exception:  # any failure means the proxy is unusable; keep the worker alive
                print('fail %s' % proxy)


if __name__ == '__main__':
    a = Proxies()
    a.verify_proxies()
    print(a.proxies)
    # Path is relative to the current working directory; it must end up where the
    # Scrapy middleware looks for proxies.txt. 'w' replaces the old list, so dead
    # proxies from earlier runs are dropped ('a' would keep accumulating them).
    with open('../../proxies.txt', 'w') as f:
        for proxy in a.proxies:
            f.write(proxy + '\n')
```

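Result: the script prints `success <proxy>` for each proxy that passes and `fail <proxy>` for each one that raises an error, then prints the list of working proxies and writes them to proxies.txt.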
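`verify_proxies` above starts 15 processes and coordinates them through two `Queue`s and stop signals. Checking a proxy is I/O-bound, since most of the time is spent waiting on the network, so a thread pool can do the same job with less plumbing. The sketch below is an alternative and not the author's code. `check_proxy`, `verify_all`, and the test URL `http://httpbin.org/ip` are assumptions, and the proxies are assumed to be plain HTTP proxies written as `http://ip:port`.

```python
from concurrent.futures import ThreadPoolExecutor

import requests


def check_proxy(proxy, test_url='http://httpbin.org/ip', timeout=2):
    """Return True if `test_url` answers 200 through `proxy` (hypothetical helper)."""
    proxies = {'http': proxy, 'https': proxy}
    try:
        return requests.get(test_url, proxies=proxies, timeout=timeout).status_code == 200
    except requests.RequestException:
        return False


def verify_all(candidates, check=check_proxy, workers=15):
    """Check proxies concurrently with threads; return the working ones in input order."""
    candidates = list(candidates)  # iterated twice below, so materialize it
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(check, candidates)  # results come back in input order
        return [proxy for proxy, ok in zip(candidates, results) if ok]
```

`verify_all(a.proxies)` would return the working subset. Threads also avoid pickling `self` into child processes, which `multiprocessing` has to do on Windows.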

2. Using the proxy IPs in a Scrapy spider

Add the following to middlewares.py:

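```python
import random
import time


# IP proxy pool
class ProxyMiddleWare(object):
    """Downloader middleware that sends every request through a random proxy from proxies.txt."""

    # Hypothetical retry cap so a URL that keeps returning
    # a non-200 status is not rescheduled forever.
    max_proxy_retries = 5

    def process_request(self, request, spider):
        '''Attach a proxy to the request object.'''
        proxy = self.get_random_proxy()
        print("this is request ip:" + proxy)
        request.meta['proxy'] = proxy

    def process_response(self, request, response, spider):
        '''Handle the returned response.'''
        # If the status is not 200, reschedule the request; process_request will
        # give the rescheduled copy a new random proxy.
        if response.status != 200:
            retries = request.meta.get('proxy_retry_times', 0)  # hypothetical meta key
            if retries >= self.max_proxy_retries:
                return response
            print("status %d, retrying %s" % (response.status, request.url))
            retry_request = request.copy()
            # Without dont_filter, the scheduler's duplicate filter drops the retry
            # because this URL has already been seen.
            retry_request.dont_filter = True
            retry_request.meta['proxy_retry_times'] = retries + 1
            return retry_request
        return response

    def get_random_proxy(self):
        '''Pick a random proxy from proxies.txt.'''
        # Relative path: resolved against the directory `scrapy crawl` is run from.
        while True:
            with open('proxies.txt', 'r') as f:
                proxies = [line.strip() for line in f if line.strip()]
            if proxies:
                return random.choice(proxies)
            # File is empty: wait for proxies.py to fill it. Note that time.sleep
            # blocks Scrapy's single-threaded event loop while it waits.
            time.sleep(1)
```

Then edit the settings file (settings.py):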

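```python
DOWNLOADER_MIDDLEWARES = {
    # Current module path; 'scrapy.contrib.downloadermiddleware...' is the deprecated
    # pre-1.0 path. 750 is Scrapy's default slot for HttpProxyMiddleware, so
    # ProxyMiddleWare (110) sets request.meta['proxy'] before it runs.
    'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 750,
    'bt1024.middlewares.ProxyMiddleWare': 110,
    'bt1024.middlewares.Bt1024DownloaderMiddleware': 534,
}
```

With this in place, every request Scrapy sends goes through a proxy picked at random from proxies.txt.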
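`get_random_proxy` opens and reads proxies.txt for every single request. One possible refinement is to keep the list in memory and reload it only when the file's modification time changes. This sketch assumes the file format stays at one proxy per line. `ProxyFileCache` is a hypothetical helper, not a Scrapy API. The middleware could create one in `__init__` and call `get_random()` from `get_random_proxy`.

```python
import os
import random


class ProxyFileCache(object):
    """Hypothetical helper: cache the proxy list and re-read it only when the file changes."""

    def __init__(self, path='proxies.txt'):
        self.path = path
        self._mtime = None   # modification time of the file when last loaded
        self._proxies = []

    def _reload_if_changed(self):
        mtime = os.path.getmtime(self.path)
        if mtime != self._mtime:
            with open(self.path) as f:
                self._proxies = [line.strip() for line in f if line.strip()]
            self._mtime = mtime

    def get_random(self):
        """Return a random proxy, or None if the file is currently empty."""
        self._reload_if_changed()
        return random.choice(self._proxies) if self._proxies else None
```

Modification-time resolution depends on the filesystem, so a rewrite within the same tick can go unnoticed until the next change. For a list that is refreshed every few minutes, that is usually acceptable.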


For more, visit IT源点.

Note: this article belongs to its author and may not be reposted without the author's permission.